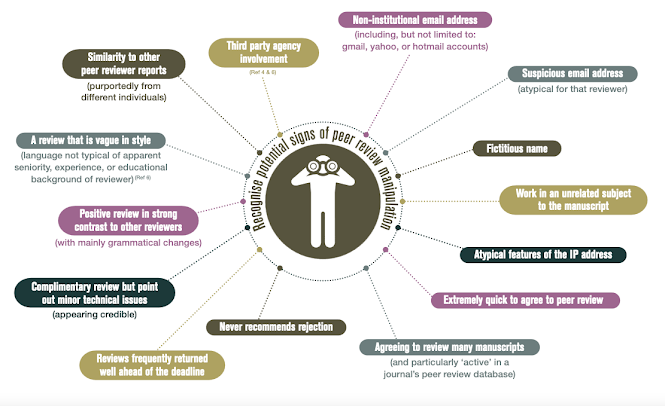

How to spot fake reviewers: a beginner's guide

For those unfamiliar with or new to academic publishing, peer review manipulation is hard to spot. This is mainly because most peer review reports are still confidential documents that stay buried deep in academic journals' editorial offices. As long as this remains the default, telling good peer reviews from bad ones is difficult enough... now we have to worry about fake peer review as well?! 😒

Well, yes. Yes, we do. The peer review process in academic publishing is unlike any other. The style and content of a manuscript are criticised, as are the thought processes that birthed it. Everything from word choice to the significance of the research focus is under scrutiny. In traditional peer review formats, reviewers recommend whether a manuscript should be accepted or rejected. This much power may make some less scrupulous people giddy... or eager to rig the system.

The refereeing work that is supposed to be one of the guarantors of research integrity is itself highly susceptible to manipulation.

- Reviewers are invited by email to assess a manuscript. But many don't supply institutional or ORCID-verified email addresses. This means that journals may mistakenly contact fake accounts run by the authors themselves or their accomplices.

- Some reviewers are recommended by the authors, but are they truly qualified to assess the work? Some elaborate peer review circles, in which a group of authors agree to review each other's manuscripts favourably, have already been uncovered.

- The anonymous nature of the process may tempt some reviewers to promote their own work inappropriately, for example by asking authors to add citations to the reviewer's own papers.

- Some researchers go even further and create entirely fictitious characters who seem to be remarkably eager peer reviewers.
