I took matters into my own hands

Yep. I did.

And decided I would write the guidelines for peer review I wish I had been given.

In the traditional publishing model, journal editors choose reviewers based on their expertise. Reviewers can now also choose to post comments on preprints that they have a particular interest in, based on their own research experience.

Crowdsourced models have also begun to flourish. But there is no shared definition of what a GOOD peer review is or how to recognise one.

We aim to provide curated resources for training, define quality standards, and raise pertinent questions that have emerged from the current context in the publishing industry.

We want to help maintain integrity in the peer review process by involving the community in assessing its own training needs.

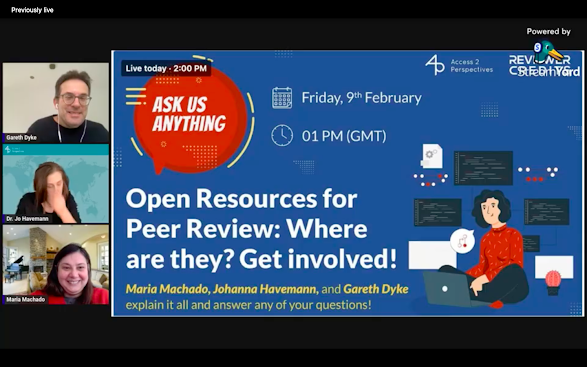

Together with some colleagues (Gareth and Jo), I came up with a crowdsourced initiative.

And we got some ideas, wishes, and plans.

We hope to improve transparency and accountability in the peer review process, enhance the reproducibility and reliability of published research, and foster a collaborative and collegial environment among researchers, publishers, and librarians.

And why, you may ask?

Teaching peer review is hard. We need all the help we can get. And there are just too many resources out there in the wild for them to be equally useful and effective. Some of them are just going to be... meh.

So, we need your help assessing them! Are you interested in learning about peer review and rating that learning experience? Please get in touch and join the gang!
